Interoperability Initiative

Conversational AI That Works Like the Web

The goal of the Open Voice Network Interoperability Initiative is to enable voice and conversational AI to work like the web. Before us is a future where users can freely find and make use of any conversational assistant and language model that addresses their goals, just as they do now with web pages.

Our path toward this goal is to define, develop, and promote standards – beginning with an open, universal application programming interface (API). Any conversational assistant that follows these standards will be able to interoperate freely with other standards-based assistants: to connect, communicate, and transfer content and control across assistants, platforms, and language models.

To learn more about our interoperability work, including our approach, process, future work, and how to get involved, follow the links below.

Why Interoperability?

This is about access, opportunity, and commercial freedom in the emerging world of conversational artificial intelligence.

Today’s conversational assistants operate largely in isolation. When a user is talking to an assistant and needs some information that it doesn’t have, the user is forced, in the best case, to open another assistant and start over. Sometimes finding another assistant is even impossible because the right assistant only works on a device the user doesn’t have.

This situation is in sharp contrast to the World Wide Web, where web browsers allow users to find, and freely visit, billions of web pages, most of them hosted by entirely different organizations. This is possible because web pages are based on standards that all web browsers understand.

The Open Voice Network envisions a similar ecosystem of standards-based, collaborating conversational assistants. Assistants using these standards would allow users to easily switch between conversational assistants – and their language models – when they need different information, just as they do when navigating among web pages.

This is the user-centric future of conversational assistance.

These standards benefit not only users but also organizations, which would otherwise have to develop a separate assistant for every device their customers might have. Because standards-based assistants can interoperate, an organization can build one assistant and reach customers through any other assistant that follows the standards.

Interoperable conversational assistants will form an open system in which connections are limited only by the outer bounds of our digital world. This promises to unleash significant and distributed economic value. Recall Metcalfe’s Law (postulated by telecommunications pioneer Robert Metcalfe in 1983), which states that the financial value of a network is proportional to the square of the number of connected users of the system. It is as relevant to the future of conversational assistance as it has been to the internet.
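A short worked count shows where the square in Metcalfe’s Law comes from. If n assistants all speak the same protocol, the number of distinct pairs that can connect is the number of ways to choose two of them, which grows roughly with n squared:

$$
C(n) = \binom{n}{2} = \frac{n(n-1)}{2}, \qquad C(10) = 45, \qquad C(100) = 4950.
$$

The same formula describes the cost side. If every pair of assistants needed its own bespoke integration, connecting n assistants would take up to n(n-1)/2 separate integrations. With one shared standard, each assistant implements a single interface, so n implementations in total.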

The Open Voice Network is defining a public API that will enable every conversational assistant that has implemented this API to connect, communicate, and transfer content and control with any other conversational assistant that has also adopted the API.
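To make the idea of one public API concrete, here is a minimal sketch, assuming a single `handle` method that accepts and returns a message. The names `InteroperableAssistant`, `handle`, `WeatherAssistant`, `BankAssistant`, and the `speaker`/`text` message fields are hypothetical illustrations. They are not the OVON specification.

```python
from typing import Protocol


class InteroperableAssistant(Protocol):
    """Hypothetical shape of the one public API every assistant would expose."""

    def handle(self, message: dict) -> dict:
        """Accept a standard message and return a standard message."""
        ...


class WeatherAssistant:
    """Implements the shared API; knows nothing about its callers' platforms."""

    def handle(self, message: dict) -> dict:
        return {"speaker": "weather", "text": f"Forecast for {message['text']}: rain"}


class BankAssistant:
    def handle(self, message: dict) -> dict:
        return {"speaker": "bank", "text": "Please verify your identity."}


def converse(assistant: InteroperableAssistant, text: str) -> dict:
    # No vendor-specific client code: any assistant that implements
    # handle() is reached in exactly the same way.
    return assistant.handle({"speaker": "user", "text": text})


for peer in (WeatherAssistant(), BankAssistant()):
    print(converse(peer, "Hamburg")["text"])
```

The caller depends only on the shared interface, not on any vendor's SDK. That is the "build once, use everywhere" property the benefits below describe.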

An open, interoperable system of voice assistants and language models promises a number of important benefits:

- For users, there will be access to more sources, more ideas, more content that goes beyond the realm (or accuracy) of general-purpose assistants. And, there’s just more convenience; users won’t be asked to turn off one assistant to pursue knowledge through another.

- In the world of the internet, do we shut down browsers when we seek a new web destination?

- For innovators and entrepreneurs, there will be more opportunity for new products and services – niche-use, add-ons, or industry-specific.

- For enterprise users, there will be:

- Increased investment efficiency, as one conversational assistant will be able to communicate with any other assistant – regardless of platform. Build once, use everywhere. No longer will brands be asked to develop separate applications, operating on separate platforms.

- Increased vendor choice – and the opportunity to develop best-of-breed or best-partner solutions.

- Direct connection with customers and other stakeholders. No longer will tech platforms stand between brands and their customers, intercepting data and relationships along the way.

- Overall, open systems drive innovation, competition, and lower costs. Open systems benefit users – especially organizational and enterprise users.

What is a Conversational Assistant?

Conversational assistants are software programs, based on AI, that assist people in accomplishing tasks by means of a natural spoken or text conversation to and from a computer. These assistants are often called chatbots (typed conversation) and voicebots (spoken conversation). Examples are general-purpose assistants like Amazon’s Alexa, Google Assistant, and Apple’s Siri, as well as enterprise assistants hosted by organizations like businesses and governments. Enterprise assistants help customers and citizens get information and perform transactions specific to the organization.

Looking forward, we expect the recent emergence of generative artificial intelligence and trained language models to drive substantial growth in the use and value of chat and voice assistance. Voice-enabled artificial intelligence will be the interface to language models, both large and domain-specific. It comes as no surprise that, as of mid-2023, Google and Amazon were said to be developing “supercharged” assistants that marry the platform and distribution reach of Google Home and Alexa to the latest large language model technology.

The OVON Approach to Interoperability

Our approach to the interoperability of conversational assistants is based upon a definition of the core patterns of conversational assistant collaboration and the messages required for inter-assistant communication.

The Architectural Patterns

OVON has identified several standard patterns through which conversational assistants interact. These describe how the assistants collaborate and the resulting user experience.

To describe the patterns, we’ll use two assistants: A and B. For our purposes here, the user will initiate the conversation through Assistant A via an endpoint that provides microphone and speaker capabilities. Keep in mind that additional assistants could also be involved in the conversation.

To illustrate the patterns, we’ll describe a sequence where the user wishes to know the weather forecast in Hamburg.

The breakthrough here is Pattern 4 – Delegation.

Pattern 1 – Native: Internal services fulfill the user request. Assistant A may use internal services or programmatic interfaces to fulfill a user request, without using the services of another conversational agent. The user asks Assistant A, “Do I need my umbrella?”; Assistant A interprets the request – consults an internal or external service known only to the publisher of Assistant A – vocalizes the answer to the user. There is neither interaction nor interoperability with another conversational assistant in this pattern; users are limited to interaction with one ecosystem of assistants. It’s what happens in a proprietary, closed, “walled garden” platform. It is outside OVON scope.

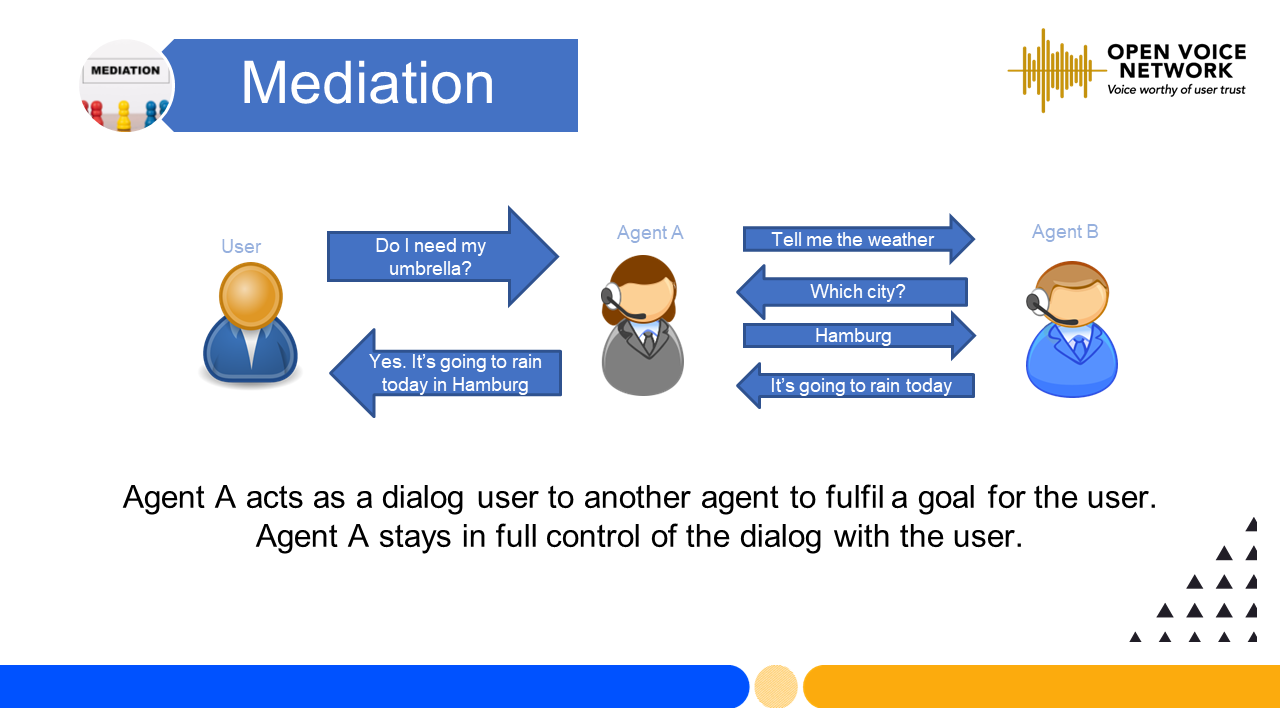

Pattern 2 – Mediation: Assistant A gets behind-the-scenes help from Assistant B. Acting as a user, Assistant A holds a conversation with Assistant B behind the scenes through dialog interfaces (semantic or linguistic) to achieve a goal, then returns the result to the user. Assistant B does not directly interact with the user, interpret user utterances, or formulate output responses. Assistant B could potentially be a human! The user of Assistant A might or might not know that this is happening.

Figure 1 – Mediation, illustrated: the user asks Assistant A, “Do I need my umbrella?”; Assistant A interprets the request – decides that it cannot fulfill the request itself – identifies and locates an external source for obtaining the response (Assistant B) – holds a private conversation with Assistant B – then Assistant A vocalizes the response to the user.

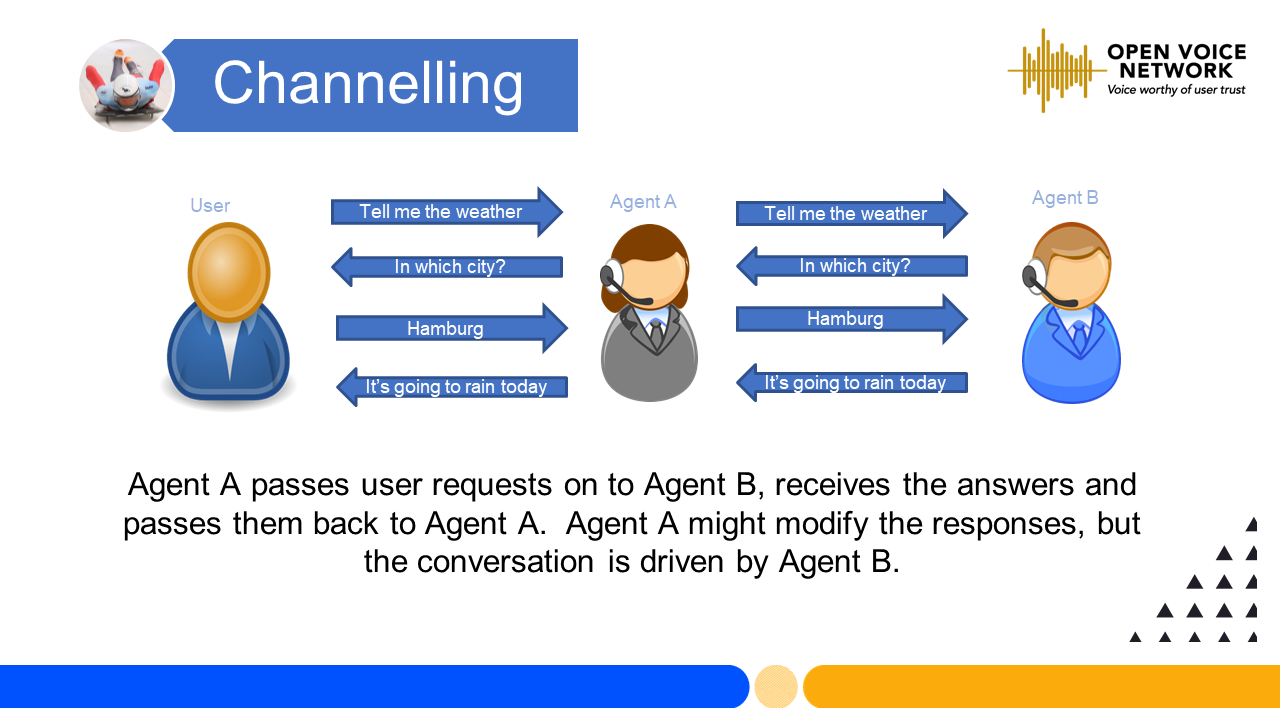
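The mediation flow above can be sketched in a few lines, assuming plain method calls stand in for the dialog interface between the assistants. The classes, the `reply` and `respond` methods, and the canned weather answer are hypothetical. The key property is that Assistant A phrases its own question to B and its own answer to the user, so B never sees the user's words.

```python
class AssistantB:
    """Hypothetical weather service reached through a dialog interface."""

    def reply(self, utterance: str) -> str:
        if "Hamburg" in utterance and "rain" in utterance:
            return "Yes, rain is expected in Hamburg tomorrow."
        return "I can only answer questions about rain in Hamburg."


class AssistantA:
    def __init__(self, helper: AssistantB) -> None:
        self.helper = helper

    def respond(self, user_utterance: str) -> str:
        if "umbrella" in user_utterance.lower():
            # A cannot answer itself, so it holds a private conversation
            # with B, acting as B's "user".
            answer = self.helper.reply("Will it rain in Hamburg tomorrow?")
            # A formulates the final response in its own words;
            # B never talks to the user directly.
            needs_umbrella = answer.lower().startswith("yes")
            return "Yes, take your umbrella." if needs_umbrella else "No umbrella needed."
        return "Sorry, I can't help with that."


assistant = AssistantA(AssistantB())
print(assistant.respond("Do I need my umbrella?"))
```

Because B only ever exchanges text with A, B could be replaced by a human operator without changing A's side of the exchange.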

Pattern 3 – Channeling: Assistant B provides dialog services to Assistant A – but Assistant A maintains the user interface. Assistant B provides dialog services to Assistant A to achieve a task. The interaction could be single- or multi-turn. Assistant B interprets and formulates utterances, but Assistant A may modify the “character” of the utterances delivered to the user. The user might or might not know that this is happening.

Figure 2 – Channeling, illustrated: the user asks Assistant A, “Do I need my umbrella?”; Assistant A interprets the request – decides that it cannot fulfill the request itself – identifies and locates an external source for obtaining the response (Assistant B). Assistant B then reinterprets the user request if necessary – formulates questions or ongoing responses to Assistant A which Assistant A may modify or enhance when interacting with the user – responds to subsequent user inputs that are passed to it via Assistant A. Of note: Assistant A remains in control of the dialog throughout the process.

One situation where channeling might be used is when Assistant A and Assistant B use different natural languages (such as English and German). Assistant A is then responsible for translating requests to, and responses from, Assistant B.

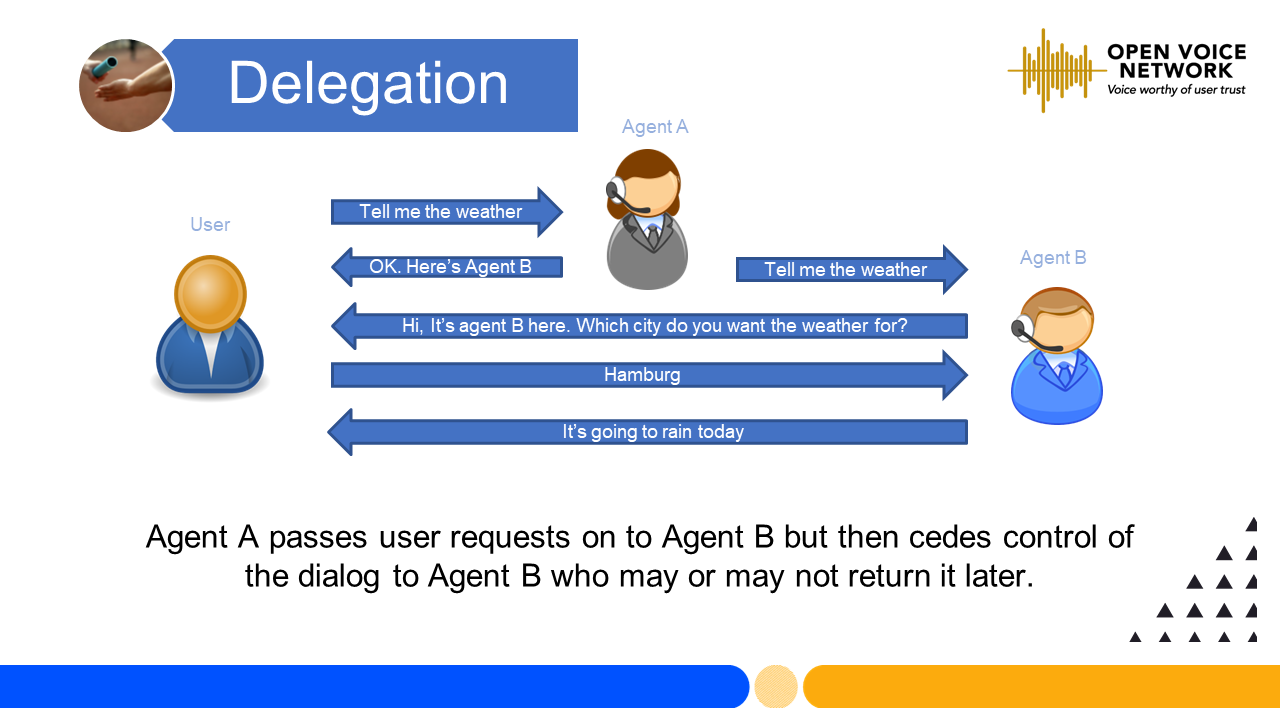
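Here is a sketch of the English/German channeling case, assuming a tiny hypothetical phrase table in place of a real translation service. The class names and `turn`/`reply` methods are illustrative only. Unlike mediation, Assistant B interprets the user's own request and formulates the answer. Assistant A only changes the answer's "character" (here, its language) and stays in control of every turn.

```python
# Hypothetical phrase tables standing in for a real translation service.
EN_TO_DE = {"Do I need my umbrella?": "Brauche ich meinen Regenschirm?"}
DE_TO_EN = {"Ja, morgen regnet es in Hamburg.": "Yes, it will rain in Hamburg tomorrow."}


class GermanAssistantB:
    """Interprets German utterances and formulates German responses."""

    def reply(self, utterance_de: str) -> str:
        if "Regenschirm" in utterance_de:
            return "Ja, morgen regnet es in Hamburg."
        return "Das habe ich nicht verstanden."


class EnglishAssistantA:
    """Keeps the user interface; channels each user turn through B."""

    def __init__(self, channel: GermanAssistantB) -> None:
        self.channel = channel

    def turn(self, user_utterance: str) -> str:
        # A forwards the user's own words (translated), not a question of its own.
        to_b = EN_TO_DE.get(user_utterance, user_utterance)
        from_b = self.channel.reply(to_b)
        # A may modify the "character" of B's output, here by translating it.
        return DE_TO_EN.get(from_b, from_b)


a = EnglishAssistantA(GermanAssistantB())
print(a.turn("Do I need my umbrella?"))
```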

Pattern 4 – Delegation: Assistant A passes control, the dialog, and context and history to Assistant B. Assistant A passes control and management of the dialog to Assistant B, along with a negotiated amount of context and dialog history. It’s similar to a transfer from one call center agent to another.

For enterprises and organizations, delegation is of critical importance – as it will enable customers to connect from an initiating assistant directly to an assistant providing a full brand experience. Delegation means no mediation by a third party; it also prevents initiating platforms from claiming the interaction data.

Figure 3 – Delegation, illustrated: The user asks Assistant A, “Tell me the weather.”; Assistant A interprets the request – decides that it cannot fulfill the request itself – identifies and locates an external source for obtaining the response (Assistant B) – may notify the user that further interaction will be managed by Assistant B. Assistant B then reinterprets the user request – continues the dialog with the user – vocalizes the response to the user. At the end of the exchange, Assistant B remains in control of further dialog.
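Delegation differs from the earlier patterns because control moves. The sketch below is minimal and assumes a hypothetical `Session` object that routes each user turn to whichever assistant currently controls the dialog. It also models the "negotiated amount" of history as a simple `max_turns` limit. Neither is part of the OVON specification. After `delegate` runs, Assistant A plays no further part in the conversation.

```python
from dataclasses import dataclass, field


@dataclass
class Assistant:
    name: str

    def reply(self, utterance: str, context: list[str]) -> str:
        return f"{self.name}: '{utterance}' (context: {len(context)})"


@dataclass
class Session:
    """Routes each user turn to whichever assistant controls the dialog."""

    controller: Assistant
    history: list[str] = field(default_factory=list)

    def user_says(self, utterance: str) -> str:
        response = self.controller.reply(utterance, self.history)
        self.history.extend([utterance, response])
        return response

    def delegate(self, target: Assistant, max_turns: int) -> None:
        # Hand over control plus only the negotiated amount of history.
        self.history = self.history[-max_turns:] if max_turns > 0 else []
        self.controller = target


a, b = Assistant("A"), Assistant("B")
session = Session(controller=a)
session.user_says("Tell me the weather.")
session.delegate(b, max_turns=1)  # A hands off; B now owns the dialog
print(session.user_says("In Hamburg, please."))
```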

Please see our publication, Interoperability Architectural Patterns – Initial Thoughts, for more details.

Standard Message Formats – the Key to Interoperability

Standard message formats are key to inter-assistant interoperability because they convey the information that assistants need to collaborate on, or take over, a conversation with a user through any of the patterns described above.

We are working on two message specifications: the Message Envelope and Dialog Events. Our examples are currently formatted in JSON, but other formats may be supported in the future.

Message Envelope

The message envelope contains information such as a conversation ID, timestamp, speaker ID, conversation history, and dialog events, as described below.
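As an illustration of the fields listed above, here is a minimal sketch of an envelope that serializes to JSON. The class name, the snake_case field names, and the `conv-42` ID are hypothetical and do not reproduce the published OVON schema. The dialog events are left as plain dictionaries.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class MessageEnvelope:
    # Field names are illustrative, not the published OVON names.
    conversation_id: str
    speaker_id: str
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )
    history: list = field(default_factory=list)  # prior turns shared with the peer
    events: list = field(default_factory=list)   # dialog events carried by this message

    def to_json(self) -> str:
        return json.dumps(asdict(self))

    @classmethod
    def from_json(cls, text: str) -> "MessageEnvelope":
        return cls(**json.loads(text))


envelope = MessageEnvelope(
    conversation_id="conv-42",
    speaker_id="assistant-a",
    events=[{"type": "utterance", "text": "Do I need my umbrella?"}],
)
print(envelope.to_json())
```

A round trip through `to_json` and `from_json` yields an equal object. That is the property any receiving assistant relies on when it parses a peer's message.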

Dialog Events

Dialog events are linguistic events exchanged between users and assistants. They include actual utterances (spoken or text) and metadata such as language, encoding, confidence, and alternatives. The same format is used for both user and assistant utterances.
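The dialog event described above can be sketched as a single shape shared by users and assistants. This is a minimal sketch under stated assumptions. The class names, the `best_text` helper, and the default values (`en-US`, `text/plain`, a confidence of 1.0) are hypothetical. The OVON Dialog Event specification defines its own fields and encodings.

```python
from dataclasses import dataclass, field


@dataclass
class Alternative:
    text: str
    confidence: float


@dataclass
class DialogEvent:
    """One utterance; the same shape serves users and assistants."""

    speaker_id: str
    text: str
    language: str = "en-US"
    encoding: str = "text/plain"
    confidence: float = 1.0
    alternatives: list[Alternative] = field(default_factory=list)

    def best_text(self) -> str:
        # Pick the highest-confidence reading among the primary
        # utterance and its alternatives.
        candidates = [(self.confidence, self.text)] + [
            (alt.confidence, alt.text) for alt in self.alternatives
        ]
        return max(candidates, key=lambda c: c[0])[1]


# A recognized user utterance with an alternative, and an assistant reply.
heard = DialogEvent(
    speaker_id="user",
    text="Do I need my umbrella?",
    confidence=0.82,
    alternatives=[Alternative("Do I need my umbrellas?", 0.11)],
)
reply = DialogEvent(speaker_id="assistant-a", text="Yes, take it.")
```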

Please see our publication, Interoperable Dialog Event Object Specification Version 1.0, for more details.

Examples of JSON-formatted messages can be seen in our GitHub repository.

What About Proprietary Protocols?

Adopting these standard patterns and messages is not expected to prevent existing conversational assistants from continuing to support native or proprietary communication protocols. The standard patterns and messages can coexist with proprietary or legacy protocols as needed in any given system: systems that communicate with each other through proprietary protocols can continue to do so, while using the standards to communicate with standards-based systems.
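The coexistence described above amounts to choosing a protocol per peer. This minimal sketch assumes two hypothetical sender functions and a hypothetical set of peer names. It routes known proprietary partners through the legacy protocol and everyone else through the standard.

```python
from typing import Callable


# Hypothetical senders: one for a legacy/proprietary protocol,
# one for the standard message format.
def send_proprietary(peer: str, text: str) -> str:
    return f"proprietary -> {peer}: {text}"


def send_standard(peer: str, text: str) -> str:
    return f"standard -> {peer}: {text}"


# Peers that share a proprietary protocol with us (names are illustrative);
# every other peer is reached through the standard.
PROPRIETARY_PEERS = {"legacy-partner"}


def send(peer: str, text: str) -> str:
    sender: Callable[[str, str], str] = (
        send_proprietary if peer in PROPRIETARY_PEERS else send_standard
    )
    return sender(peer, text)


print(send("legacy-partner", "hello"))
print(send("city-info-bot", "hello"))
```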

OVON’s Standards Development Process

We believe that standard protocols are required for interoperability among conversational assistants from different vendors. Standards should be developed and maintained in a way that is open, communal, transparent, participatory, and accessible to everyone, resulting in a lightweight approach.

The OVON approach to developing interoperability protocols for conversational assistants consists of several steps:

- Collect and analyze case studies of interoperability of conversational assistants.

- Publish requirements and specifications for review by everyone. We have published the following documents:

– Interoperability of Conversational Assistants – 22 September, 2020

– Interoperability Architectural Patterns – Initial Thoughts – 1 December, 2022

– Interoperable Dialog Packet Requirements – 1 December, 2022

– Interoperable Dialog Event Object Specification Version 1.0 – 9 June, 2023

– Envelope Specification – Coming soon

- Share current and planned work in open webinars and demonstrations:

– Interoperability of Conversational Assistants – 24 August, 2022

– Interoperability Protocols for Conversational Assistants – 22 March, 2023

– Dialog Events – Negotiation and Delegation Protocols for Collaborating Conversational Assistants – 15 June, 2023

– The Message Envelope & Universal API for Conversational Interoperability – 20 September, 2023

- Keep documents and code in a common repository available to all. All of our experimental code is available on GitHub.

- Encourage developer involvement. We have partnered with external developers who validate our specifications by implementing and testing them. We are currently working with the government of Estonia on implementing OVON protocols in their Bürokratt government information system.

Future Work: The Open Voice Network Roadmap

OVON will investigate issues including the following. This list may grow as we uncover additional issues during our current work.

Discovery and Location of Conversational Agents

We plan to investigate how users and conversational assistants can discover and locate conversational agents.

Useful information for discovering, locating, and accessing interoperable conversational assistants includes:

- Metadata describing the use and capabilities of the conversational assistant.

- Uniform Resource Locator (URL), which contains the name or location of the conversational assistant.

- Instructions for accessing the conversational assistant.

Human users could find this information in any of several sources, including:

- Social media

- Websites associated with each interoperable conversational assistant

- A universal repository in which interoperable conversational assistants are registered, similar to the Domain Name System (DNS) for the Internet.
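To picture what a DNS-like repository might hold, here is a toy in-memory sketch. It assumes each entry combines the three kinds of information listed above: capability metadata, a URL, and access instructions. `AssistantRecord`, `Registry`, `resolve`, and `find` are hypothetical names, and the URL uses the reserved `example.com` domain. A real registry would need to be distributed and to address the trust questions raised below.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AssistantRecord:
    """Hypothetical registry entry: metadata, URL, and access instructions."""

    name: str
    capabilities: frozenset[str]
    url: str
    access: str


class Registry:
    """Toy, in-memory stand-in for a DNS-like assistant registry."""

    def __init__(self) -> None:
        self._records: dict[str, AssistantRecord] = {}

    def register(self, record: AssistantRecord) -> None:
        self._records[record.name] = record

    def resolve(self, name: str) -> Optional[AssistantRecord]:
        # Name-based lookup, analogous to resolving a domain name.
        return self._records.get(name)

    def find(self, capability: str) -> list[AssistantRecord]:
        # Capability-based discovery using each assistant's metadata.
        return [r for r in self._records.values() if capability in r.capabilities]


registry = Registry()
registry.register(AssistantRecord(
    name="hamburg-weather",
    capabilities=frozenset({"weather", "forecast"}),
    url="https://weather.example.com/assistant",
    access="Send standard message envelopes over HTTPS",
))
```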

Security and Privacy Issues

We also plan to investigate security and privacy issues, including:

- Encryption of messages among interoperable conversational assistants.

- Establishing trust between two interoperable conversational assistants before they begin to interact.

- Establishing policies for sharing data, including how to:

- establish ownership of new and derived data

- establish rights for users (both human and non-human assistants) to access data

- Support for the principles established by the Open Voice Network Trustmark Initiative.

Get Involved

– Click here to learn how to participate.

– Click here to browse our volunteer opportunities.

FAQs

Will generative AI eliminate the need for interoperability?

No. In fact, we believe that it will increase the need for interoperability.

Generative AI is a type of artificial intelligence system that is trained on massively large amounts of text, and can understand, summarize, generate, and predict new content. Examples of generative AI systems include OpenAI’s ChatGPT and Google’s Bard.

No one conversational assistant will do it all, and no one generative AI system will do it all. Generic systems do not know (and users don’t want them to know!) sensitive information such as your address, social security number, or salary. But a conversational assistant from your bank, university, or doctor WILL know your sensitive information!

Depending upon what they want to do, users will move back and forth between assistants that don’t know their sensitive information and domain- and brand-specific assistants that do. Users’ lives will be simplified if all assistants can interoperate.

What are the benefits of a single universal API?

- Simplicity: Developers need to learn, use, and maintain only the single universal interface rather than a separate API for each service.

- Consistency: The single universal interface cannot be changed by any one service provider.

- Scalability: The single universal interface can handle a wider range of requests than any vendor-specific API.

Existing conversational assistants will need to be able to interpret and generate the standard set of OVON messages whenever they need to communicate with other OVON-compliant assistants. The internal operations of existing assistants will not need to be modified.
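The point that internal operations stay unchanged can be illustrated with a thin adapter. This minimal sketch assumes a hypothetical `LegacyAssistant` with its own `answer` method and a hypothetical `StandardAdapter`. The adapter translates between standard messages (with illustrative field names) and the legacy call, and the legacy class itself is never modified.

```python
class LegacyAssistant:
    """An existing assistant with its own internal API (left unchanged)."""

    def answer(self, question: str) -> str:
        return "Your balance is 120 EUR." if "balance" in question else "Sorry?"


class StandardAdapter:
    """Hypothetical wrapper that speaks standard messages on the outside."""

    def __init__(self, inner: LegacyAssistant, speaker_id: str) -> None:
        self.inner = inner
        self.speaker_id = speaker_id

    def handle(self, message: dict) -> dict:
        # Interpret the incoming standard message.
        text = message["events"][0]["text"]
        # Call the existing assistant exactly as before.
        reply = self.inner.answer(text)
        # Generate a standard message in return.
        return {
            "conversation_id": message["conversation_id"],
            "speaker_id": self.speaker_id,
            "events": [{"type": "utterance", "text": reply}],
        }


bot = StandardAdapter(LegacyAssistant(), "bank-bot")
out = bot.handle({
    "conversation_id": "c1",
    "speaker_id": "user",
    "events": [{"type": "utterance", "text": "What is my balance?"}],
})
print(out["events"][0]["text"])
```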